Natural Language Processing on IPUs

Graphcore IPUs, built specifically for AI, are the ideal cloud compute platform for training, fine-tuning and deploying NLP applications quickly and efficiently.

Whether you are an AI SaaS company focused on NLP-based platforms, such as intelligent chatbots or tools that deliver real-time insights from customer service conversations, or an enterprise exploring more efficient Large Language Models (LLMs) like GPT, read on to find out more.

Transforming Industries

Applications with Natural Language Processing (NLP) models at their core are deployed by companies around the world to boost productivity, provide faster business insights, save money, increase security and reduce fraud, improve customer retention and overall business competitiveness.

Use cases include intelligent chatbots, sentiment analysis in finance, real-time insights for customer service, content moderation in social networks, fraud detection in financial services, protein and genome analysis in drug discovery, content generation in marketing and translation, text summarisation of news and social media feeds, and much more.

Understanding Language with Aleph Alpha

Graphcore and Aleph Alpha are working together to research and deploy the next generation of multilingual Large Language Models (LLMs) on current and next-generation IPU systems. Applications include conversational platforms for more intelligent and efficient Q&A in chatbots, and advanced semantic search for knowledge management systems, with an interface that more closely resembles asking a human expert a question than entering keywords into a search engine.

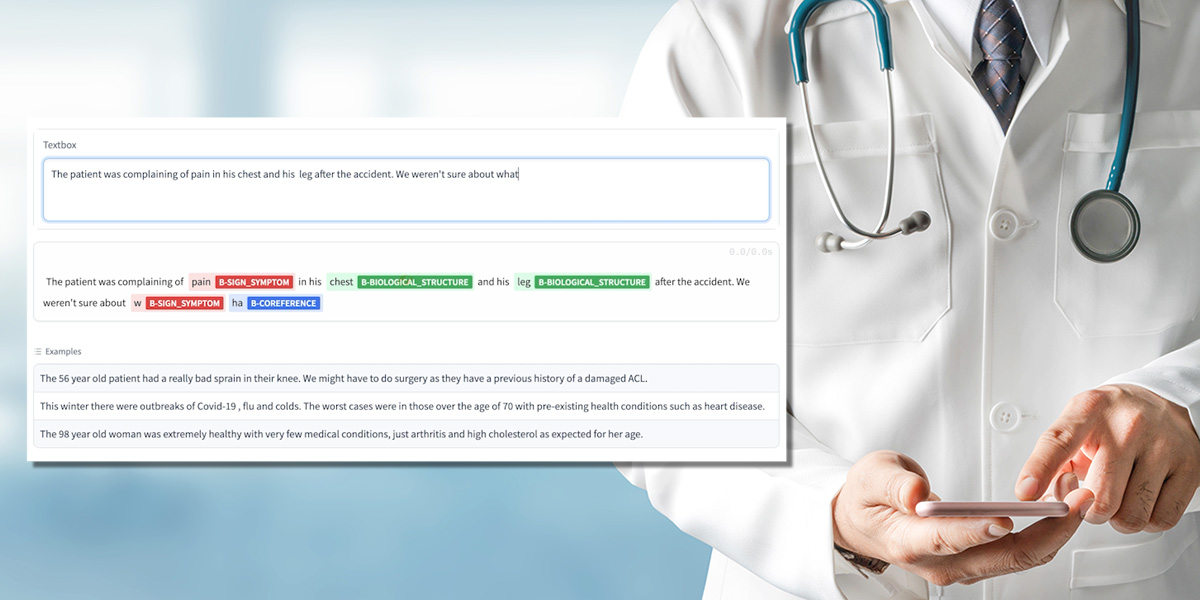

Intelligent Text Analytics for Accurate Insights

Graphcore partner Pienso delivers a machine learning platform, based on IPU-powered NLP models, that helps enterprises understand text data better than ever before. Customer service teams use the low-code/no-code Pienso service to generate insight, inform strategy and inspire action; investment firms use Pienso to monitor news and social feeds to inform their investment strategies; and social media groups gain access to highly intelligent, easy-to-use content moderation tools.