For more performance results, visit our Performance Results page.

IPU-POD16 opens up a new world of machine intelligence innovation.

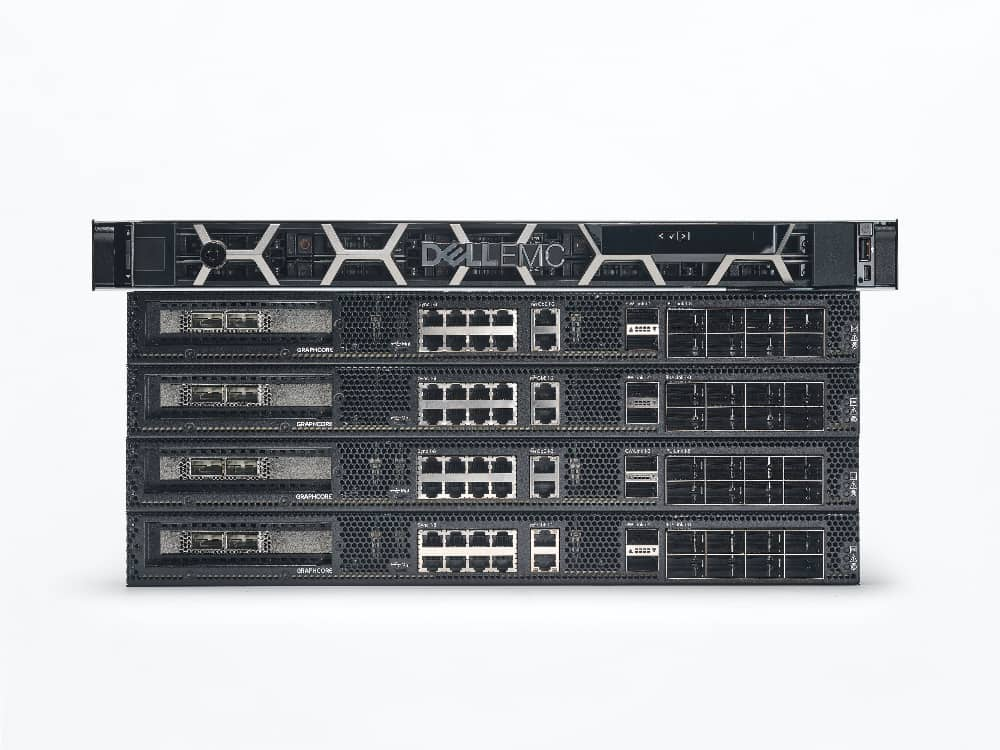

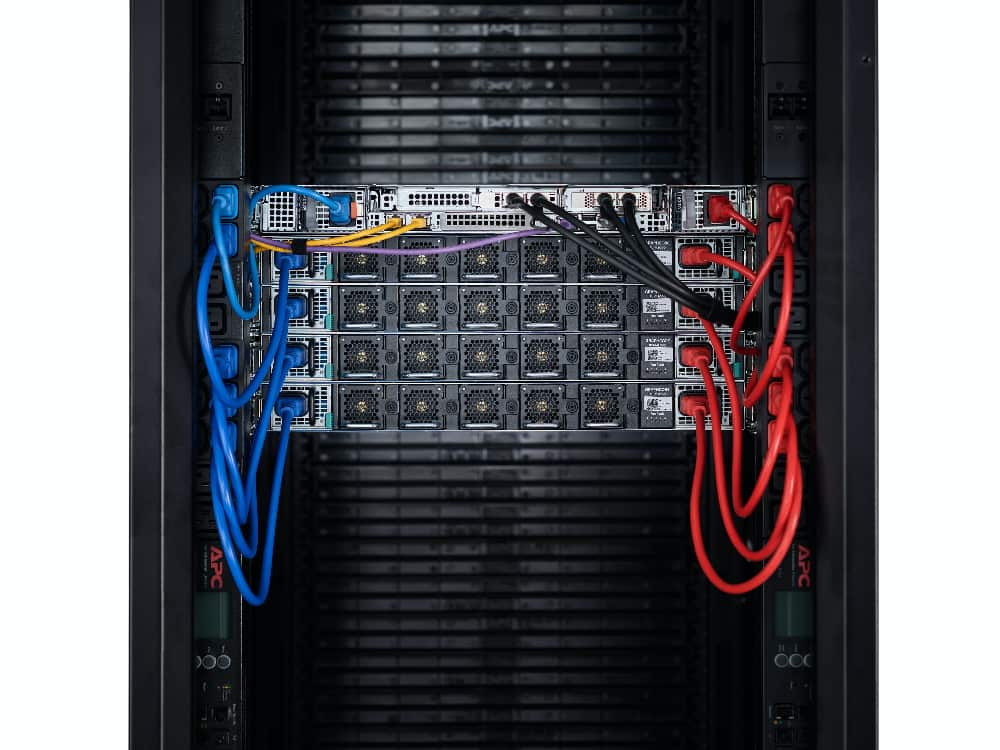

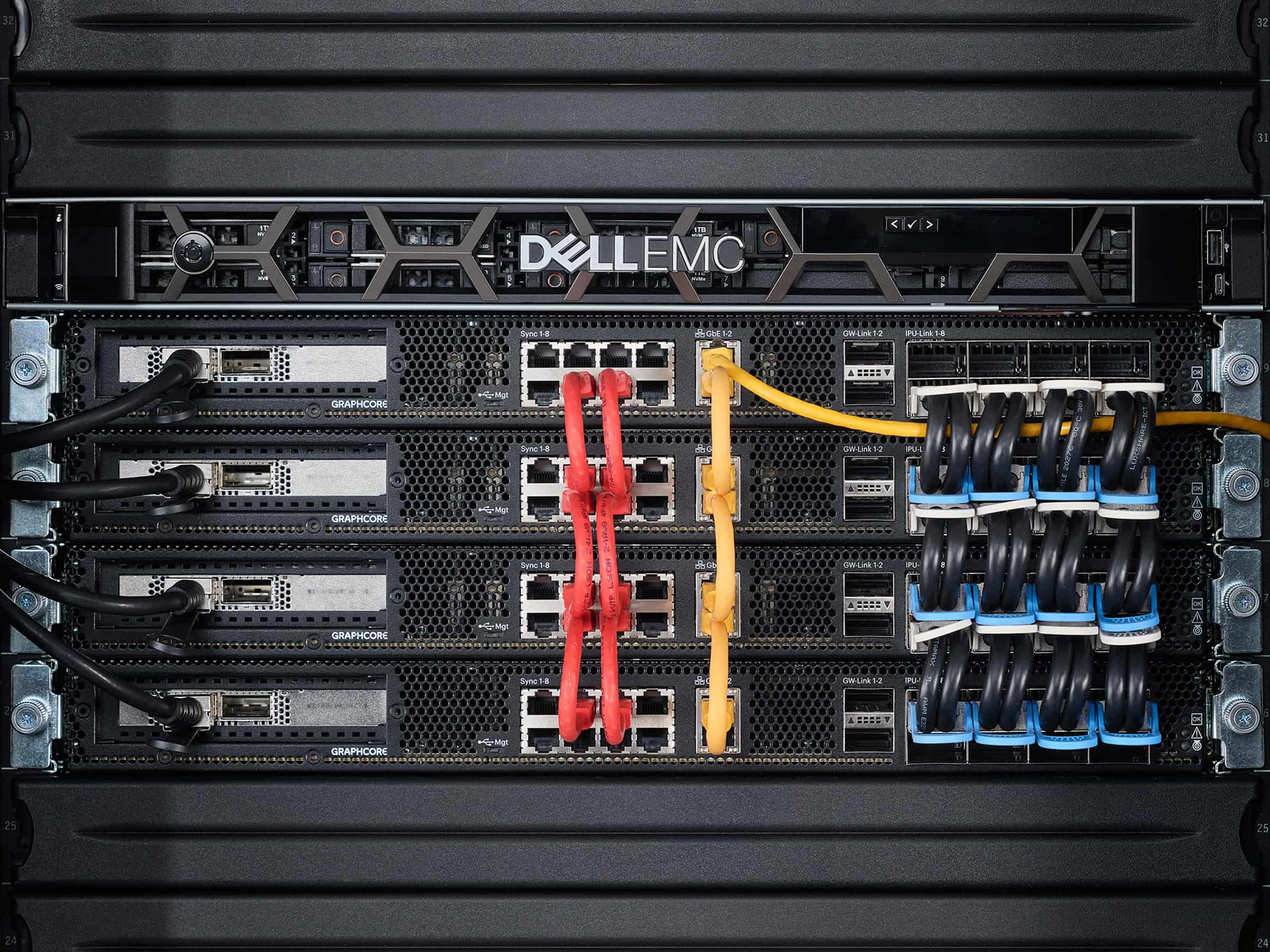

Built from four interconnected IPU-M2000s and a pre-qualified host server from your choice of leading technology brands, IPU-POD16 is available to purchase today, in the cloud or for your datacenter, from our global network of channel partners and systems integrators.

Performance

World-class results, whether you want to explore innovative models and new possibilities or achieve faster time to train, higher throughput, or better performance per TCO dollar.

| Specification | IPU-POD16 |
| --- | --- |
| IPUs | 16x GC200 IPUs |
| IPU-M2000s | 4x IPU-M2000s |
| Memory | 14.4GB In-Processor-Memory™ and up to 1024GB Streaming Memory |
| Performance | 4 petaFLOPS FP16.16; 1 petaFLOPS FP32 |
| IPU Cores | 23,552 |
| Threads | 141,312 |
| IPU-Fabric | 2.8Tbps |
| Host-Link | 100 GE RoCEv2 |
| Software | Poplar; TensorFlow, PyTorch, PyTorch Lightning, Keras, PaddlePaddle, Hugging Face, ONNX, HALO; OpenBMC, DMTF Redfish, IPMI over LAN, Prometheus, and Grafana; Slurm, Kubernetes; OpenStack, VMware ESG |
| System Weight | 66kg + Host server |
| System Dimensions | 4U + Host servers and switches |
| Host Server | Selection of approved host servers from Graphcore partners |
| Thermal | Air-Cooled |
| Optional Switched Version | Contact Graphcore Sales |
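
The aggregate figures in the specification above can be broken down per unit with simple arithmetic. The following is a minimal sketch rather than an official Graphcore tool: the dictionary keys and the `per_unit_breakdown` function are hypothetical names chosen for illustration, the totals are copied from the table, and the calculation assumes resources are divided evenly across IPUs and that 1GB = 1000MB.

```python
from fractions import Fraction

# Aggregate figures copied from the specification table above.
# Key names are illustrative, not part of any Graphcore API.
POD16 = {
    "ipus": 16,
    "ipu_m2000s": 4,
    "cores": 23_552,
    "threads": 141_312,
    "in_processor_memory_gb": Fraction("14.4"),  # exact decimal, avoids float error
    "fp16_petaflops": 4,
}


def per_unit_breakdown(spec):
    """Derive per-unit figures, assuming an even split across IPUs."""
    return {
        "ipus_per_m2000": spec["ipus"] // spec["ipu_m2000s"],
        "cores_per_ipu": spec["cores"] // spec["ipus"],
        "threads_per_core": spec["threads"] // spec["cores"],
        # Assumption: 1 GB = 1000 MB.
        "memory_mb_per_ipu": int(spec["in_processor_memory_gb"] * 1000 / spec["ipus"]),
        "fp16_teraflops_per_ipu": spec["fp16_petaflops"] * 1000 / spec["ipus"],
    }


if __name__ == "__main__":
    for name, value in per_unit_breakdown(POD16).items():
        print(f"{name}: {value}")
```

Under these assumptions, the table's totals correspond to 4 IPUs per IPU-M2000, 1,472 cores per IPU, 6 threads per core, 900MB of In-Processor-Memory per IPU, and 250 teraFLOPS FP16.16 per IPU.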