Next Generation IPU Systems

IPU-M2000 + IPU-POD4

Core building blocks for AI infrastructure at scale

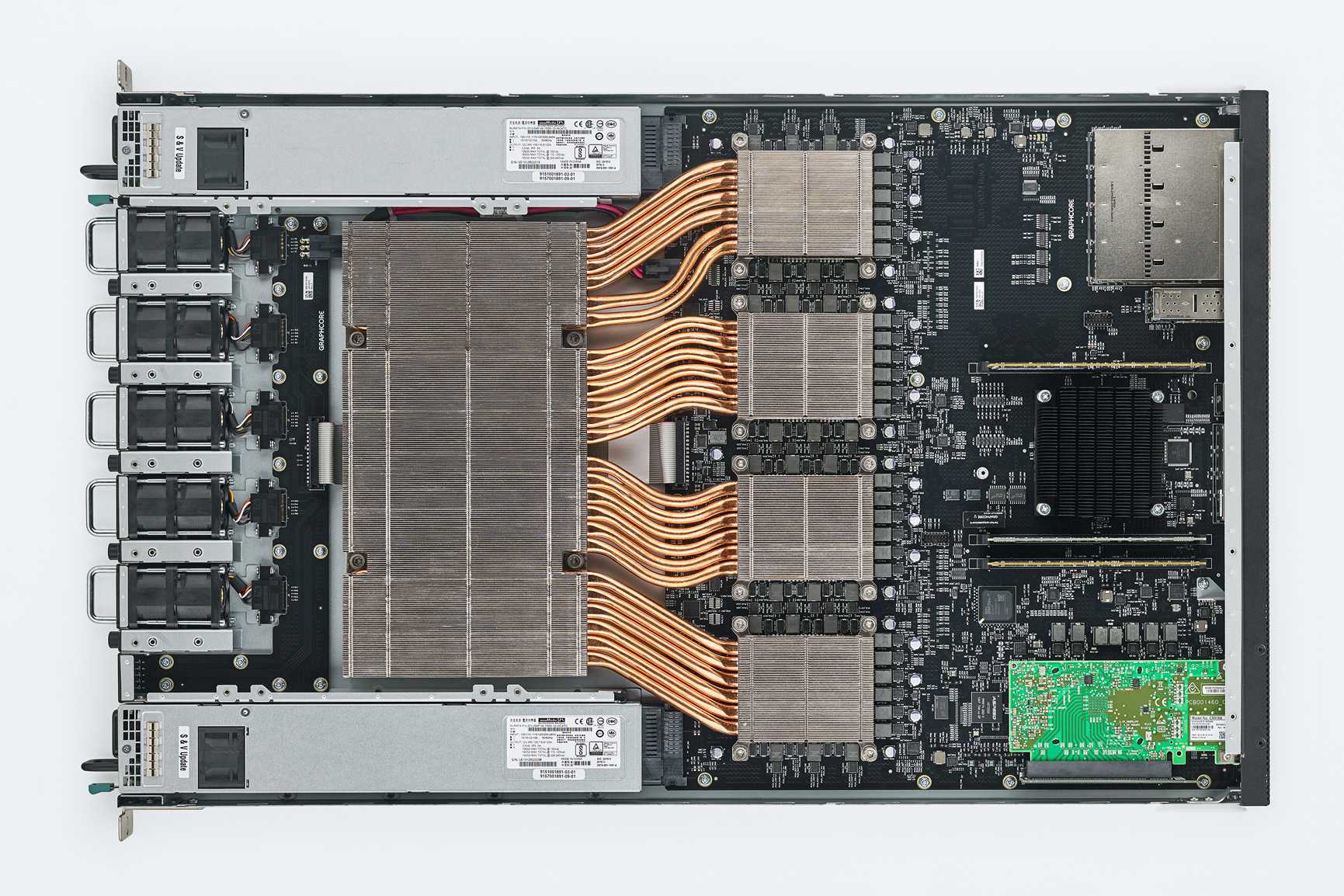

The IPU-M2000 is the fundamental compute engine for IPU-based machine intelligence, built with the powerful Colossus Mk2 IPU designed from the ground up for AI. It packs 1 petaFLOP of AI compute with 3.6GB In-Processor-Memory™ and up to 256GB Streaming Memory™ in a slim 1U blade. The IPU-M2000 has a flexible, modular design, so you can start with one and scale out to many in our IPU-POD platforms.

Directly connect one IPU-M2000 to a host server from one of our OEM partners to build the entry-level IPU-POD4 and start your IPU journey.

Get started with the IPU-POD4 - the entry-level AI compute engine to start your IPU journey, combining a single IPU-M2000, directly connected to a host server, in a 2U pre-qualified system.

As the fundamental compute engine for all our IPU-POD platforms, the IPU-POD4 - one IPU-M2000 plus your choice of approved host servers - delivers a real performance advantage against the A100 GPU in the DGX platform.

Finance firms seeking alpha achieve blisteringly fast time-to-result with Markov Chain Monte Carlo models.

Search engines get results faster for their users using Natural Language Processing models like BERT-Large.

Connect with our experts to assess your AI infrastructure requirements and solution fit.